The Intelligent Interactive Systems Group at Harvard was founded in September 2009. We design, build, and evaluate interactive systems that have some machine intelligence under the hood. This work requires simultaneous innovation in design and computation, so we use a wide range of methods: qualitative research, design, quantitative controlled experiments, new algorithms, and working system implementations.

Some of the things we are currently working on include:

- human-AI interaction design principles for AI-assisted decision-making

- decision support tools that support contestation and optimize for the welfare of decision subjects

- tools for detecting and quantifying motor impairments

- tools to support humanitarian frontline negotiators

- understanding how to structure collaborations between community organizations and AI technologists such that these collaborations bring real benefits to communities

- and we continue to work on LabintheWild!

If you are interested in joining our group (as a PhD student, a postdoctoral fellow, or an undergraduate researcher), here is some relevant information.

Highlights

May 2, 2025: Zilin Ma defended his PhD dissertation on Designing Intelligent Interactive Systems for Vulnerable Populations. Over the past 6 years, Zilin focused on understanding and designing technology that benefits vulnerable groups. He analyzed the benefits and perils of LLM chatbots in supporting the well-being of LGBTQ+ people; he demonstrated how dating websites could be redesigned to reduce implicit racial bias when people choose prospective partners; and he developed tools to support frontline negotiators.

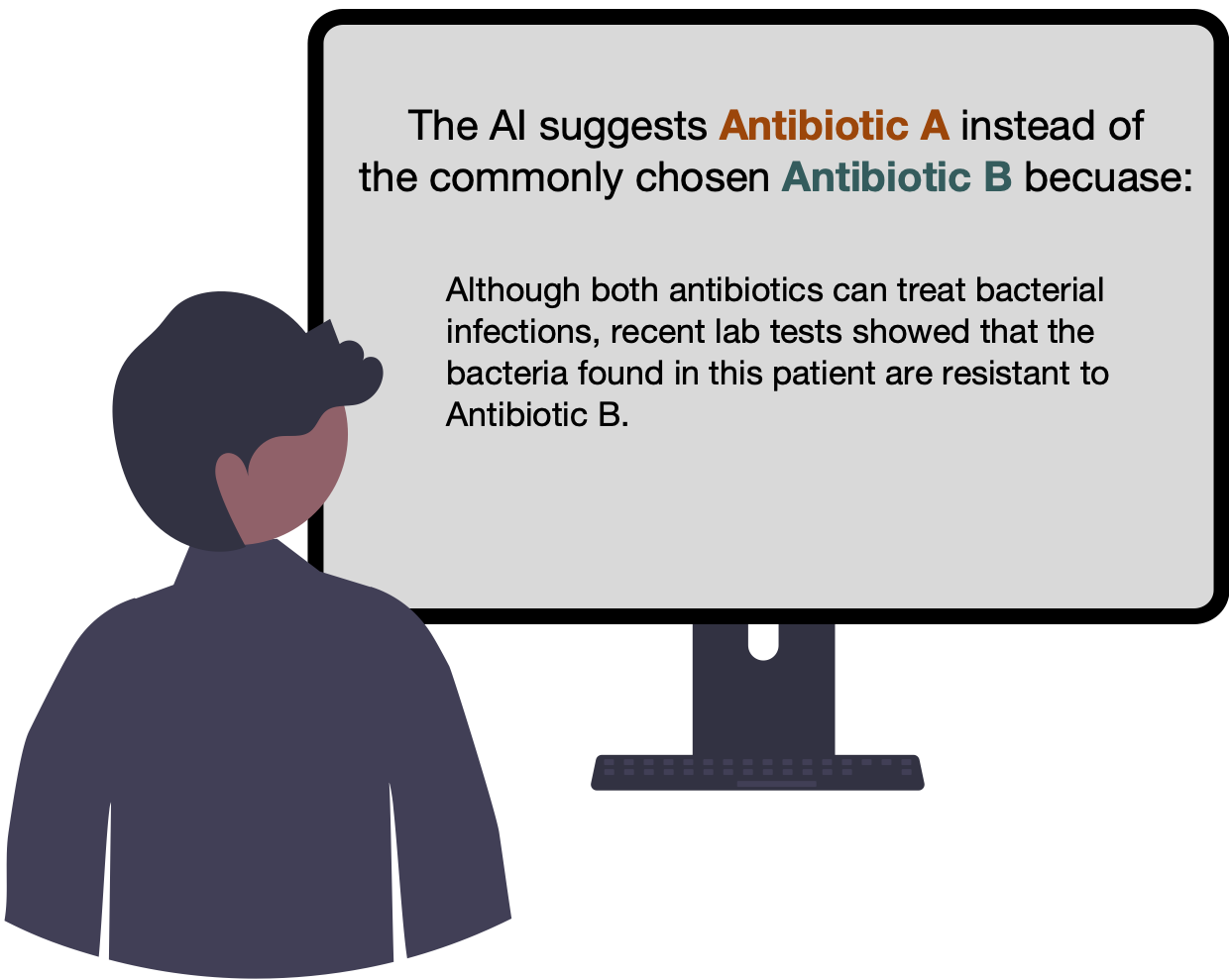

April 28, 2025: Zana’s latest paper shows that AI decision recommendations supported by contrastive explanations ("choose A instead of B because...") help people grow their skills. But this only happens if the alternative (the B in the contrastive explanation) is something that people would plausibly consider. This is important because earlier work showed that people do not learn when AI provides conventional explanations (reasons for or against a decision).

April 2, 2025: Zana Buçinca defended her PhD dissertation on Worker-Centric AI for Decision Support. During her PhD, Zana demonstrated that human cognitive engagement moderates the effectiveness of AI support in human decision making, introduced cognitive forcing functions, and launched the new subfield of worker-centric AI. Congratulations!

March 26, 2025: Siddarth Swaroop from the DtAK group presented joint work with Zana Buçinca on Personalising AI assistance based on overreliance rate in AI-assisted decision making at IUI’25.

March 25, 2025: Sohini Upadhyay presented her work showing that Counterfactual Explanations May Not Be the Best Algorithmic Recourse Approach at IUI’25.

Dec 6, 2024: Our advice when professional colleagues tell us that their organization urgently needs to adopt AI: Keep calm and carry on, with or without AI.

Nov 12, 2024: Hongjin presented a paper on Centering Community Organizations in Artificial Intelligence for Social Good Partnerships at CSCW’24! We also have a blog post summarizing the key points.